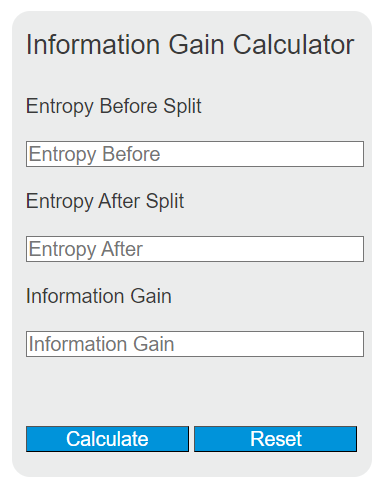

Enter the entropy before and after a split to calculate the information gain. Information gain measures the reduction in entropy (or surprise) achieved by splitting a dataset, and it is commonly used when training decision trees.

Information Gain Formula

The following formula is used to calculate the information gain:

IG = E_{before} - E_{after}

Variables:

- IG is the information gain

- E_{before} is the entropy before the split

- E_{after} is the entropy after the split

To calculate the information gain, subtract the entropy after the split from the entropy before the split.
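As a minimal Python sketch, the formula is a single subtraction. The function name `information_gain` is illustrative only and not part of any library, and the sketch assumes `e_after` has already been computed as the weighted entropy of the subsets produced by the split.

```python
def information_gain(e_before: float, e_after: float) -> float:
    """Return IG = E_before - E_after.

    e_before: entropy of the dataset before the split.
    e_after:  entropy after the split (assumed to already be the
              weighted average over the resulting subsets).
    """
    return e_before - e_after


print(information_gain(1.0, 0.25))  # → 0.75
```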

What is Information Gain?

Information gain is a metric that quantifies the reduction in entropy (impurity) of a dataset when it is split according to a feature or rule. It is a key concept in machine learning and is particularly important in building decision trees, where, at each node, the feature whose split yields the largest gain (equivalently, the largest reduction in uncertainty about the target variable) is selected.
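The feature-selection idea can be sketched in Python as follows. This is a minimal illustration, not any library's implementation: it assumes categorical features stored in dicts, a multiway split on each distinct feature value, and base-2 Shannon entropy (bits). The toy dataset and the helper names `entropy` and `information_gain` are hypothetical.

```python
from collections import Counter
from math import log2


def entropy(labels):
    """Shannon entropy (base 2, in bits) of a sequence of class labels."""
    n = len(labels)
    probs = (count / n for count in Counter(labels).values())
    return sum(-p * log2(p) for p in probs)


def information_gain(rows, labels, feature):
    """IG from splitting `labels` by the value of `feature` in each row."""
    # Group the labels by the feature value of their row (multiway split).
    groups = {}
    for row, label in zip(rows, labels):
        groups.setdefault(row[feature], []).append(label)
    # E_after: entropy of each subset, weighted by its share of the samples.
    n = len(labels)
    e_after = sum(len(g) / n * entropy(g) for g in groups.values())
    return entropy(labels) - e_after


# Hypothetical toy dataset: two categorical features and a yes/no target.
rows = [
    {"outlook": "sunny", "windy": False},
    {"outlook": "sunny", "windy": True},
    {"outlook": "rainy", "windy": False},
    {"outlook": "rainy", "windy": True},
]
labels = ["no", "no", "yes", "yes"]

# Pick the feature whose split gives the largest information gain.
gains = {f: information_gain(rows, labels, f) for f in ("outlook", "windy")}
best = max(gains, key=gains.get)
print(gains, best)  # → {'outlook': 1.0, 'windy': 0.0} outlook
```

Here `outlook` separates the classes perfectly (gain 1.0), while splitting on `windy` leaves each subset evenly mixed (gain 0.0), so `outlook` would be chosen.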

How to Calculate Information Gain?

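The following steps outline how to calculate information gain:

- First, determine the entropy before the split (E_{before}). For a dataset whose classes occur with proportions p_i, this is the Shannon entropy -Σ p_i log_2(p_i), usually computed with base-2 logarithms so the result is in bits.

- Next, determine the entropy after the split (E_{after}). This is the weighted average of the entropies of the resulting subsets, with each subset weighted by its fraction of the samples.

- Finally, use the formula IG = E_{before} - E_{after} to calculate the information gain (IG).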
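As a worked illustration of these steps, consider a hypothetical dataset of 10 samples (5 positive, 5 negative) split into a left subset of 4 samples (all positive) and a right subset of 6 samples (1 positive, 5 negative). Using base-2 logarithms and weighting each subset's entropy by its share of the samples:

```latex
\begin{aligned}
E_{before} &= -\tfrac{5}{10}\log_2\tfrac{5}{10} - \tfrac{5}{10}\log_2\tfrac{5}{10} = 1.0 \\
E_{left}   &= -\tfrac{4}{4}\log_2\tfrac{4}{4} = 0 \\
E_{right}  &= -\tfrac{1}{6}\log_2\tfrac{1}{6} - \tfrac{5}{6}\log_2\tfrac{5}{6} \approx 0.650 \\
E_{after}  &= \tfrac{4}{10}\,E_{left} + \tfrac{6}{10}\,E_{right} \approx 0.390 \\
IG         &= E_{before} - E_{after} \approx 1.0 - 0.390 = 0.610
\end{aligned}
```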

Example Problem:

Use the following variables as an example problem to test your knowledge.

Entropy before the split (E_{before}) = 1.0

Entropy after the split (E_{after}) = 0.5
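Substituting these values into the information gain formula gives:

```latex
IG = E_{before} - E_{after} = 1.0 - 0.5 = 0.5
```

The split removes half of the original uncertainty.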