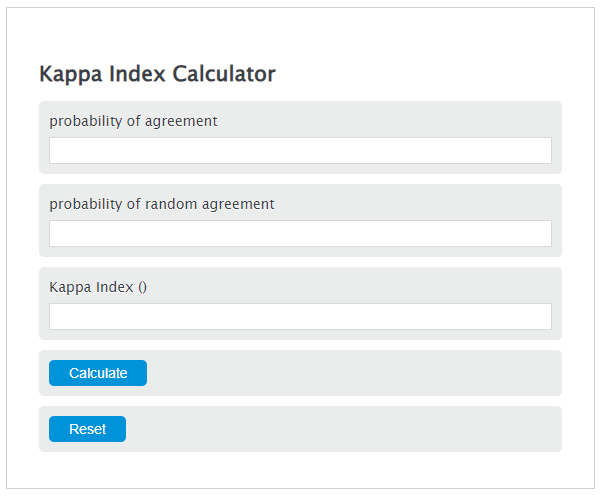

Enter the probability of agreement and the probability of random agreement into the calculator to evaluate the Kappa Index.

Kappa Index Formula

KI = (P0 - Pe) / (1 - Pe)

Variables:

- KI is the Kappa Index (dimensionless)

- P0 is the observed probability of agreement (a proportion between 0 and 1)

- Pe is the probability of random (chance) agreement (a proportion between 0 and 1)

To calculate the Kappa Index, subtract the probability of random agreement from the probability of agreement, then divide the result by 1 minus the probability of random agreement.
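The calculation described above fits in a few lines of Python. This is a minimal sketch that assumes both inputs are given as proportions between 0 and 1; the function name `kappa_index` and the input checks are illustrative choices, not part of the original calculator.

```python
def kappa_index(p0: float, pe: float) -> float:
    """Return the Kappa Index KI = (P0 - Pe) / (1 - Pe).

    p0: observed probability of agreement, as a proportion in [0, 1].
    pe: probability of random (chance) agreement, as a proportion in [0, 1).
    """
    # Both inputs are probabilities, so reject anything outside [0, 1].
    if not (0.0 <= p0 <= 1.0 and 0.0 <= pe <= 1.0):
        raise ValueError("p0 and pe must be probabilities between 0 and 1")
    # Pe = 1 would make the denominator zero, so kappa is undefined there.
    if pe == 1.0:
        raise ValueError("kappa is undefined when pe == 1")
    # Agreement beyond chance, divided by the maximum possible agreement beyond chance.
    return (p0 - pe) / (1.0 - pe)


print(kappa_index(0.75, 0.5))  # 0.5
```

Here raters who agree 75% of the time, when 50% agreement is expected by chance, achieve half of the possible agreement beyond chance.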

How to Calculate Kappa Index?

The following steps outline how to calculate the Kappa Index.

- First, determine the probability of agreement.

- Next, determine the probability of random agreement.

- Next, apply the formula above: KI = (P0 - Pe) / (1 - Pe).

- Finally, calculate the Kappa Index.

- After inserting the variables and calculating the result, check your answer with the calculator above.
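The steps above do not say where P0 and Pe come from. One common case (Cohen's kappa for two raters) derives both from a square table of counts, and the sketch below assumes that setup: `table[i][j]` is a hypothetical count of items rater A placed in category i and rater B placed in category j. The function names are illustrative.

```python
from typing import Sequence, Tuple


def agreement_probabilities(table: Sequence[Sequence[int]]) -> Tuple[float, float]:
    """Return (P0, Pe) from a k x k table of rating counts for two raters."""
    k = len(table)
    if k == 0 or any(len(row) != k for row in table):
        raise ValueError("table must be a non-empty square matrix of counts")
    n = sum(sum(row) for row in table)
    if n == 0:
        raise ValueError("table must contain at least one rated item")
    # Step 1: observed agreement is the share of items on the diagonal.
    p0 = sum(table[i][i] for i in range(k)) / n
    # Step 2: chance agreement multiplies the two raters' marginal
    # proportions for each category and sums over categories.
    row_totals = [sum(row) for row in table]
    col_totals = [sum(table[i][j] for i in range(k)) for j in range(k)]
    pe = sum((row_totals[c] / n) * (col_totals[c] / n) for c in range(k))
    return p0, pe


def kappa_from_table(table: Sequence[Sequence[int]]) -> float:
    p0, pe = agreement_probabilities(table)
    # Steps 3 and 4: apply KI = (P0 - Pe) / (1 - Pe).
    return (p0 - pe) / (1.0 - pe)


counts = [[20, 5], [10, 15]]  # hypothetical two-category ratings of 50 items
print(round(kappa_from_table(counts), 4))  # 0.4
```

For these counts P0 = 35/50 = 0.7 and Pe = 0.5 × 0.6 + 0.5 × 0.4 = 0.5, so KI = 0.2 / 0.5 = 0.4.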

Example Problem:

Use the following variables as an example problem to test your knowledge.

probability of agreement = 40

probability of random agreement = 250
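Substituting the example values exactly as given shows the arithmetic of the formula. Note that 40 and 250 lie outside the 0 to 1 range that a probability can take, so this only illustrates the mechanics of the calculation rather than a meaningful kappa.

```latex
KI = \frac{P_0 - P_e}{1 - P_e}
   = \frac{40 - 250}{1 - 250}
   = \frac{-210}{-249}
   \approx 0.8434
```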

FAQs

What is the Kappa Index?

The Kappa Index is a statistical measure of the level of agreement between two raters or sources beyond what would be expected by chance. It accounts for both the observed agreement and the probability of random agreement, giving a more accurate picture of the true agreement.

Why is the Kappa Index important?

The Kappa Index is important because, unlike a simple percent agreement calculation, it corrects for the agreement that would occur by chance alone. It is widely used in fields such as healthcare, research, and machine learning to assess the reliability of diagnostic tests, raters, and classification models, respectively.

How can the Kappa Index be interpreted?

The Kappa Index can range from -1 to 1, where 1 indicates perfect agreement, 0 indicates the level of agreement expected by random chance, and negative values indicate less agreement than expected by chance. Under the widely used Landis and Koch benchmarks, values from 0.00 to 0.20 indicate slight agreement, 0.21 to 0.40 fair, 0.41 to 0.60 moderate, 0.61 to 0.80 substantial, and 0.81 to 1.00 almost perfect agreement.
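A small helper can translate a kappa value into a verbal label. This sketch assumes the Landis and Koch (1977) bands: below 0 poor, up to 0.20 slight, up to 0.40 fair, up to 0.60 moderate, up to 0.80 substantial, and above that almost perfect. The function name `interpret_kappa` is hypothetical.

```python
def interpret_kappa(kappa: float) -> str:
    """Map a kappa value to a Landis and Koch style agreement label.

    Assumption: the band boundaries follow Landis and Koch (1977);
    other interpretation scales exist and draw the lines differently.
    """
    if not -1.0 <= kappa <= 1.0:
        raise ValueError("kappa must lie between -1 and 1")
    if kappa < 0.0:
        return "poor (less than chance)"
    if kappa <= 0.20:
        return "slight"
    if kappa <= 0.40:
        return "fair"
    if kappa <= 0.60:
        return "moderate"
    if kappa <= 0.80:
        return "substantial"
    return "almost perfect"


print(interpret_kappa(0.7))   # substantial
print(interpret_kappa(0.85))  # almost perfect
```

Checking the upper bound of each band in order means values falling between the rounded cut-offs (for example 0.205) still land in the next band up.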

Are there any limitations to using the Kappa Index?

Yes, the Kappa Index has limitations. It can be affected by the prevalence of the outcome, rater bias, and the number of categories in the rating system. A high Kappa value also does not necessarily mean high accuracy, because it measures agreement beyond chance rather than correctness.
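The prevalence effect mentioned above can be seen with two hypothetical 2 × 2 rating tables that share the same observed agreement. This sketch assumes two raters making yes/no judgements on 100 items; `cohen_kappa_2x2` and the counts are illustrative, not taken from the source.

```python
def cohen_kappa_2x2(a: int, b: int, c: int, d: int) -> float:
    """Kappa for two raters and two categories.

    a: both raters say "yes"; d: both say "no";
    b: rater A "yes", rater B "no"; c: rater A "no", rater B "yes".
    """
    n = a + b + c + d
    p0 = (a + d) / n
    # Chance agreement from each rater's marginal "yes" and "no" rates.
    yes_a, yes_b = (a + b) / n, (a + c) / n
    pe = yes_a * yes_b + (1 - yes_a) * (1 - yes_b)
    return (p0 - pe) / (1 - pe)


# Both tables have 90% observed agreement (P0 = 0.9).
balanced = cohen_kappa_2x2(45, 5, 5, 45)  # "yes" is as common as "no"
skewed = cohen_kappa_2x2(85, 5, 5, 5)     # "yes" dominates (high prevalence)
print(round(balanced, 3), round(skewed, 3))  # 0.8 0.444
```

In the skewed table chance agreement rises to Pe = 0.82, so the same 90% raw agreement yields a much lower kappa.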