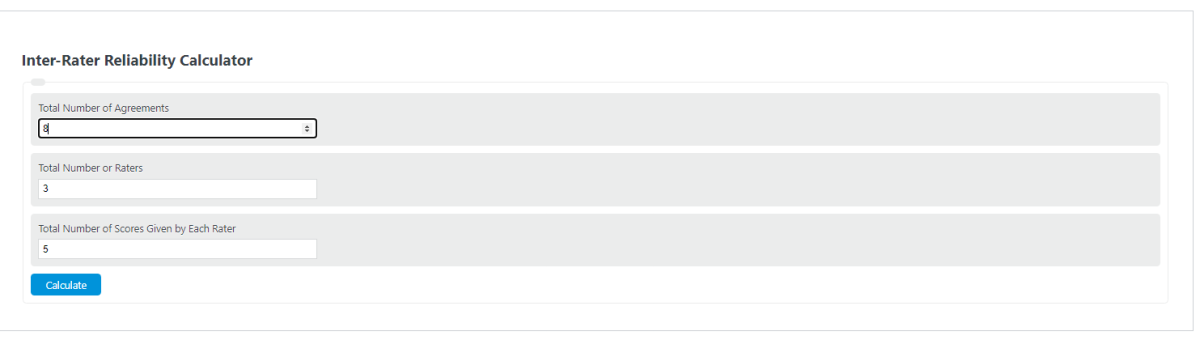

Enter the number of ratings in agreement, the total number of ratings, and the number of raters into the calculator to determine the inter-rater reliability.

Inter-Rater Reliability Formula

The following formula is used to calculate the inter-rater reliability between judges or raters.

IRR = TA / (TR * R) * 100

- Where IRR is the inter-rater reliability (%)

- TA is the total number of agreements in the ratings

- TR is the total number of ratings given by each rater

- R is the number of raters

To calculate inter-rater reliability, multiply the number of ratings given by each rater by the number of raters, divide the number of agreements by that product, then multiply by 100.

Use this formula only when there are more than two raters. With exactly two raters, the formula simplifies to:

IRR = TA / TR * 100
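The two formulas above can be sketched as a single Python function. This is a minimal illustration, not part of the original calculator: the function name `inter_rater_reliability` and its parameter names are assumptions chosen for readability, and the input checks are an added safeguard.

```python
def inter_rater_reliability(total_agreements, ratings_per_rater, num_raters):
    """Return the inter-rater reliability (IRR) as a percentage.

    total_agreements  -- TA, the total number of agreements in the ratings
    ratings_per_rater -- TR, the number of ratings given by each rater
    num_raters        -- R, the number of raters
    """
    if num_raters < 2:
        raise ValueError("at least two raters are required")
    if ratings_per_rater <= 0:
        raise ValueError("each rater must give at least one rating")

    if num_raters == 2:
        # Two raters: IRR = TA / TR * 100
        denominator = ratings_per_rater
    else:
        # More than two raters: IRR = TA / (TR * R) * 100
        denominator = ratings_per_rater * num_raters

    return 100 * total_agreements / denominator


# Hypothetical inputs: 2 raters, 5 ratings each, 4 agreements.
print(inter_rater_reliability(4, 5, 2))  # 80.0
```

Keeping the two-rater case as an explicit branch mirrors the text, which treats it as a separate, simplified formula.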

Inter-Rater Reliability Definition

Inter-rater reliability is defined as the ratio of the total number of agreements between raters to the total number of ratings. The term describes how often a set of judges or raters agree on a given score.

Example Problem

How to calculate inter-rater reliability?

First, determine the total number of ratings that were given by the raters. For this example, each of 3 judges gave 5 ratings for one contestant.

Next, determine the total number of scores that were in agreement. In this case, the raters agreed on a total of 8 scores.

Finally, calculate the inter-rater reliability.

Using the formula above, the inter-rater reliability is calculated as:

IRR = TA / (TR * R) * 100

= 8 / (5 * 3) * 100

= 53.33% agreement.
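The arithmetic in this example can be checked with a few lines of Python. The variable names are illustrative assumptions. The values are the ones stated in the example.

```python
# Values from the example problem.
ratings_per_rater = 5  # TR: ratings given by each judge
num_raters = 3         # R: number of judges
total_agreements = 8   # TA: scores the judges agreed on

# Denominator of the formula: TR * R
total_ratings = ratings_per_rater * num_raters

# IRR = TA / (TR * R) * 100
irr = 100 * total_agreements / total_ratings

print(f"IRR = {irr:.2f}% agreement")  # IRR = 53.33% agreement
```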